Contributors: Sushant Agarwal, Sherin Eapen, Mansi Upadhyay, Ashu Srivastav, Hita Garapati, Neha Kulkarni, Jitesh K. Pillai, Uzma Saeed, Puneet Saxena, Chandra Sekhar Pedamallu

Date: March 2025

With advances in computing infrastructure and the growing availability of big data, Artificial Intelligence (AI) is evolving rapidly and expanding its role across diverse domains, including biopharma and healthcare. In particular, deep learning models such as U-Net are transforming critical applications, including biomedical image segmentation and medical imaging. From diagnosing diseases to suggesting personalized treatments, AI has steadily gained the trust of experts and of regulatory authorities such as the FDA. Given the growing burden of cancer, AI's potential in oncology is being actively explored, especially for early cancer detection.

Medical imaging has become one of the most effective tools for cancer detection, helping to reduce reliance on complex biopsy procedures. While our earlier whitepaper detailed various biomedical image analysis tools used in cancer detection and treatment, along with the broader benefits of AI/ML, this blog focuses specifically on U-Net and its foundational role in advancing medical imaging.

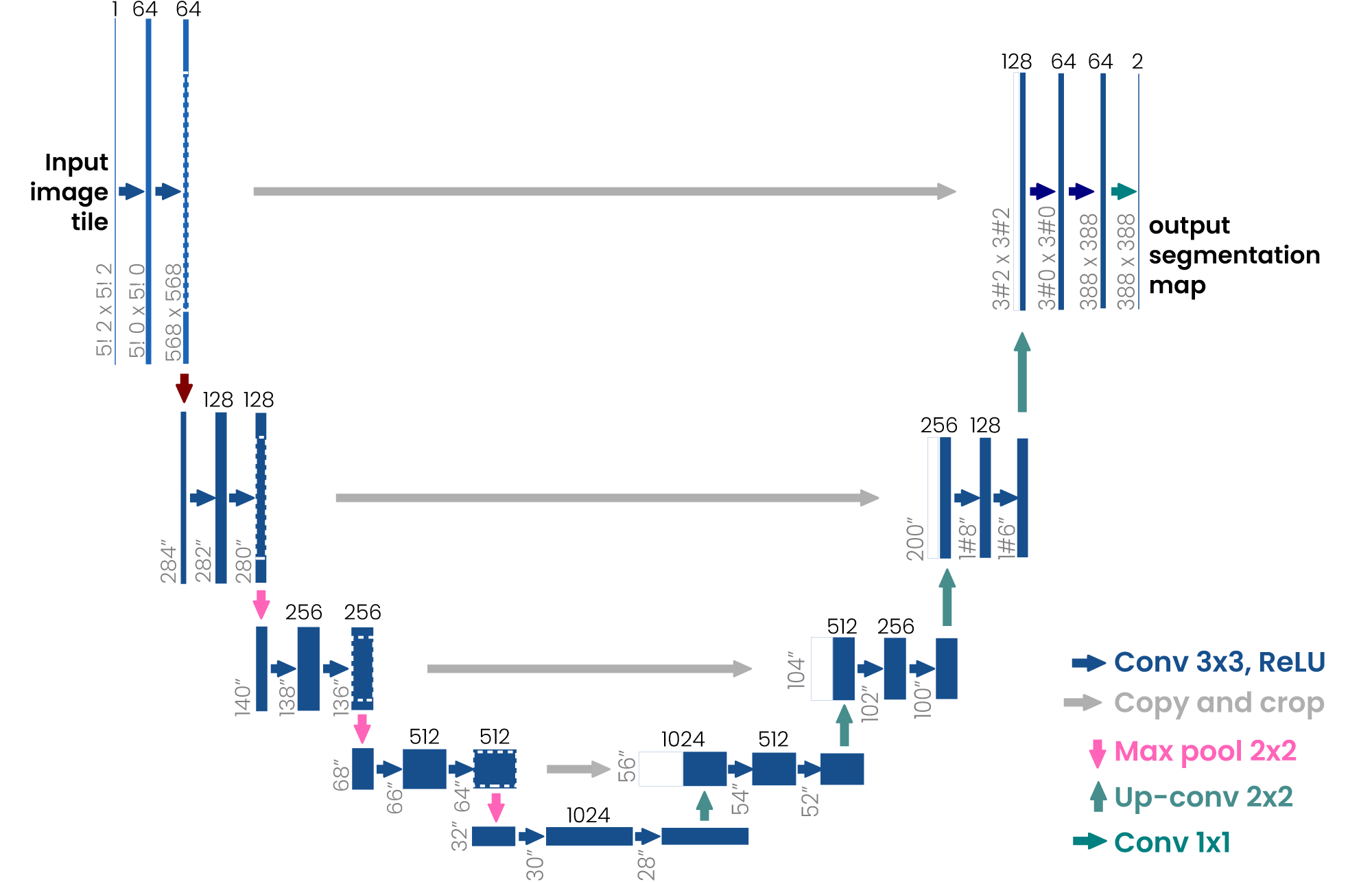

The U-Net architecture (Fig. 1), originally proposed by Olaf Ronneberger and colleagues in 2015 for biomedical image segmentation, quickly became a cornerstone of medical imaging. Its primary strength is its ability to generate precise, pixel-level segmentations from relatively small annotated datasets, an essential property in medical applications. U-Net’s symmetric encoder-decoder architecture, combined with skip connections between the two paths, allows it to capture global context and fine-grained detail simultaneously. This capability has made U-Net a preferred model for many biomedical image segmentation tasks, and its use has since extended beyond medicine to areas such as satellite imaging and object detection.

Fig. 1: Basic U-Net architecture. The arrows represent the different operations, the blue boxes represent the feature maps at each layer, and the gray boxes represent the cropped feature maps from the contracting path [1].

Key Features of U-Net in Medical Imaging

- Encoder-Decoder Architecture: U-Net follows a symmetric, U-shaped design. The encoder (contracting) path consists of repeated convolution and max-pooling layers; each down-sampling step halves the spatial resolution and doubles the number of feature channels. The decoder (expanding) path mirrors the encoder, using up-sampling layers to progressively restore spatial resolution.

- Skip Connections: U-Net’s skip connections link corresponding layers of the encoder and decoder, concatenating encoder feature maps with the up-sampled decoder feature maps at the same resolution. This lets the model retain high-resolution features from earlier layers, which is crucial for segmentation accuracy because it preserves spatial details that would otherwise be lost during down-sampling.

- Fully Convolutional Network (FCN): U-Net is a fully convolutional network: it contains no fully connected layers, so it can handle input images of different sizes, as long as the dimensions suit the repeated 2× down-sampling. This also reduces the number of parameters compared with models that use fully connected layers, making U-Net more efficient.

- Data Augmentation: One of U-Net’s key strengths is its reliance on aggressive data augmentation during training. By augmenting the training data with rotations, translations, elastic deformations, and other transformations, U-Net can generalize well even from small training datasets, which are common in biomedical applications.
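The shape bookkeeping behind the encoder-decoder design, the skip connection, and the fully convolutional layout can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not a trainable U-Net: `fake_conv` is a hypothetical stand-in that mixes channels with random 1×1 weights instead of learning 3×3 filters, nearest-neighbour up-sampling replaces the learned up-convolution, and padding is assumed so that encoder and decoder maps have the same size (the original U-Net used unpadded convolutions and cropped the encoder features before concatenation). Arrays are laid out as (channels, height, width).

```python
import numpy as np


def max_pool_2x2(x: np.ndarray) -> np.ndarray:
    """2x2 max-pooling with stride 2 on a (channels, H, W) array; H and W must be even."""
    c, h, w = x.shape
    return x.reshape(c, h // 2, 2, w // 2, 2).max(axis=(2, 4))


def upsample_2x(x: np.ndarray) -> np.ndarray:
    """Nearest-neighbour up-sampling that doubles H and W (stand-in for a learned up-convolution)."""
    return x.repeat(2, axis=1).repeat(2, axis=2)


def fake_conv(x: np.ndarray, out_channels: int, rng: np.random.Generator,
              relu: bool = True) -> np.ndarray:
    """Hypothetical stand-in for a padded convolution: a random 1x1 channel mix.

    It changes only the channel count and keeps H and W, which is all a shape trace needs.
    """
    weights = rng.standard_normal((out_channels, x.shape[0]))
    y = np.einsum("oc,chw->ohw", weights, x)
    return np.maximum(y, 0.0) if relu else y


def tiny_unet_shapes(image: np.ndarray, base_channels: int = 64, seed: int = 0):
    """Trace shapes through one encoder level, a bottleneck, and one decoder level."""
    rng = np.random.default_rng(seed)
    enc1 = fake_conv(image, base_channels, rng)                # (64, H, W)
    down = max_pool_2x2(enc1)                                  # (64, H/2, W/2): resolution halved
    bottom = fake_conv(down, 2 * base_channels, rng)           # (128, H/2, W/2): channels doubled
    up = fake_conv(upsample_2x(bottom), base_channels, rng)    # (64, H, W): resolution restored
    merged = np.concatenate([enc1, up], axis=0)                # (128, H, W): skip connection
    logits = fake_conv(merged, 2, rng, relu=False)             # (2, H, W): per-pixel class scores
    return enc1.shape, down.shape, bottom.shape, merged.shape, logits.shape


if __name__ == "__main__":
    # No fully connected layers, so any even-sized input works.
    print(tiny_unet_shapes(np.ones((1, 64, 64))))
    # → ((64, 64, 64), (64, 32, 32), (128, 32, 32), (128, 64, 64), (2, 64, 64))
    print(tiny_unet_shapes(np.ones((1, 96, 128)))[-1])
    # → (2, 96, 128)
```

Because the skip connection concatenates along the channel axis, the final decoder stage sees 128 channels at full resolution: 64 carrying fine spatial detail from the encoder and 64 carrying up-sampled context from the bottleneck.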

Advantages of U-Net

- High Accuracy on Limited Data: U-Net’s ability to perform well with small annotated datasets is one of its most significant advantages. Extensive data augmentation during training, together with careful handling of feature maps through skip connections, helps the model make the most of the available data.

- Precise Segmentation: The use of skip connections allows U-Net to produce highly detailed segmentations. This is particularly important in medical imaging, where accurately delineating boundaries (e.g., between tumors and healthy tissue) is critical.

- Versatility: Although U-Net was originally designed for biomedical segmentation, its utility extends well beyond it: it has been applied successfully to satellite imagery, environmental monitoring, and other fields.

- Fast Convergence: Thanks to its efficient architecture and its skip connections, which give gradients a short path back to the early layers, U-Net tends to converge quickly during training, often requiring fewer epochs than other models on similar tasks.
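U-Net’s performance on small datasets leans heavily on data augmentation, and one detail is easy to get wrong: every geometric transform must be applied identically to the image and to its label mask. The NumPy sketch below assumes 2-D single-channel images with a boolean or integer mask and uses only 90° rotations, horizontal flips, and small zero-filled shifts; elastic deformations and intensity augmentations are left out for brevity. The function names and the `max_shift` parameter are illustrative, not taken from any particular library.

```python
import numpy as np


def shift(a: np.ndarray, dy: int, dx: int) -> np.ndarray:
    """Translate a 2-D array by (dy, dx) pixels, filling the uncovered border with zeros."""
    out = np.zeros_like(a)
    h, w = a.shape
    src = a[max(0, -dy):h - max(0, dy), max(0, -dx):w - max(0, dx)]
    out[max(0, dy):max(0, dy) + src.shape[0], max(0, dx):max(0, dx) + src.shape[1]] = src
    return out


def augment_pair(image: np.ndarray, mask: np.ndarray, rng: np.random.Generator,
                 max_shift: int = 4):
    """Apply one random rotation, flip, and shift identically to an image and its label mask.

    Geometric transforms must hit both arrays in the same way; otherwise the
    labels no longer line up with the pixels they describe.
    """
    k = int(rng.integers(0, 4))                       # rotate by k * 90 degrees
    image, mask = np.rot90(image, k), np.rot90(mask, k)
    if rng.random() < 0.5:                            # horizontal flip half of the time
        image, mask = np.fliplr(image), np.fliplr(mask)
    dy, dx = (int(v) for v in rng.integers(-max_shift, max_shift + 1, size=2))
    return shift(image, dy, dx), shift(mask, dy, dx)  # zero border = background in the mask


if __name__ == "__main__":
    rng = np.random.default_rng(42)
    image = rng.random((32, 32))
    mask = image > 0.5                  # toy "segmentation" tied to pixel intensity
    aug_image, aug_mask = augment_pair(image, mask, rng)
    print(np.array_equal(aug_mask, aug_image > 0.5))  # True: labels still aligned
```

Drawing the random parameters once and reusing them for both arrays is the design choice that matters here; sampling them separately for the image and the mask would silently corrupt the training labels.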

Applications of U-Net in Healthcare & Beyond

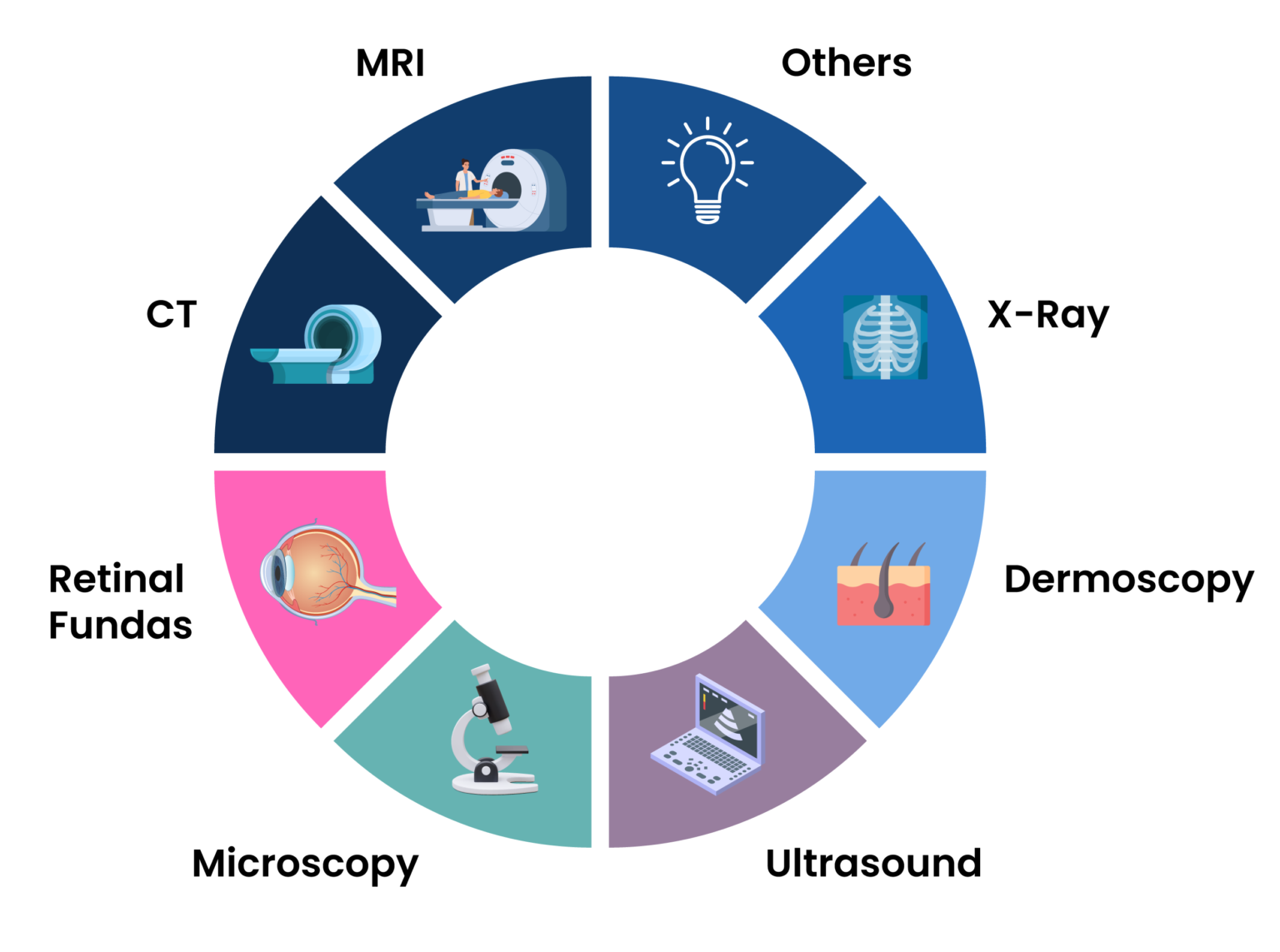

U-Net has found applications in many domains where pixel-level precision is essential (Fig. 2). Its impact on medical imaging is the best known, with use cases such as segmenting organs, tumors, or lesions in MRI, CT, or histopathology images. The model has also been applied to other computer vision tasks, such as defect detection in manufacturing, road segmentation for autonomous driving, and plant disease detection in agriculture.

Fig. 2: Image modalities that U-Net can handle.

Variants of U-Net

Examining U-Net’s variants makes it clear that while architectures such as 3D U-Net and U-Net++ bring substantial improvements, they also raise concerns about complexity and generalization. Though more powerful, these models come with their own trade-offs, especially in computational overhead and suitability for specific applications.

3D U-Net: Powerful but Overly Specialized?

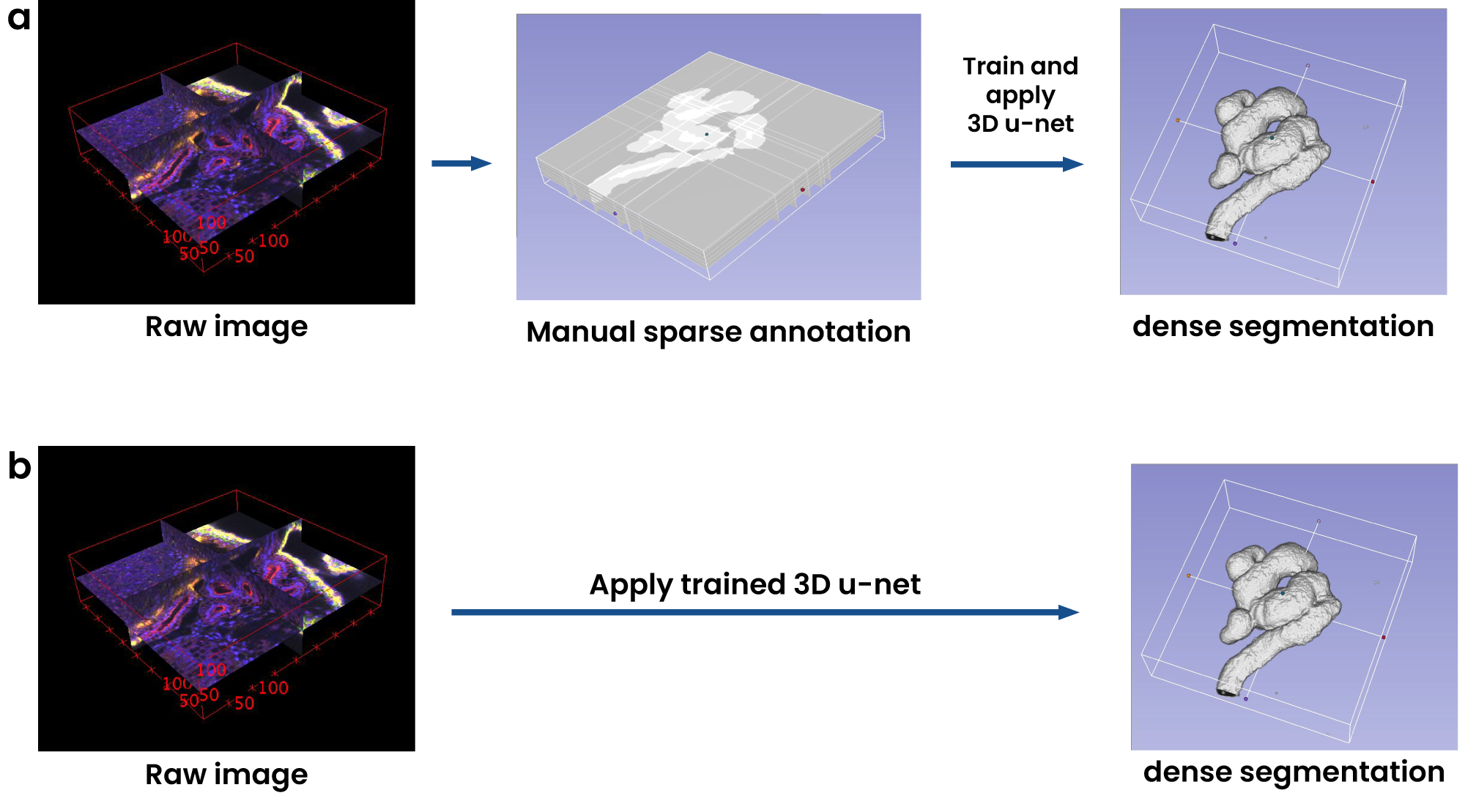

The 3D U-Net (Fig. 3) represents a substantial leap in volumetric segmentation, allowing deep learning models to process 3D data more effectively. A natural extension of the original U-Net, it replaces the 2D operations (convolutions, max-pooling, and up-convolutions) with their 3D counterparts so that it can take volumetric inputs directly. However, this added complexity does not necessarily yield improvements across all applications. While medical imaging tasks involving MRI and CT scans clearly benefit, the specificity of the 3D U-Net’s design could hinder its adaptability to more generic tasks. Its reliance on 3D convolutions and volumetric data makes it a niche model: highly effective, but overly specialized.

Fig. 3: Architecture of the 3D U-Net [2].
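The overhead of moving from 2D to 3D convolutions can be estimated with back-of-the-envelope arithmetic. The sketch below assumes a single layer with 64 input and 64 output channels, 3×3 versus 3×3×3 kernels, "same" padding, float32 activations, and a 128×128 slice versus a 128×128×128 volume; these sizes are illustrative assumptions, not the configuration used in the 3D U-Net paper.

```python
import math


def conv_params(kernel: int, dims: int, c_in: int, c_out: int) -> int:
    """Weights plus biases of one convolution layer whose kernel is kernel**dims voxels."""
    return kernel ** dims * c_in * c_out + c_out


def conv_macs(kernel: int, dims: int, c_in: int, c_out: int, spatial: tuple) -> int:
    """Multiply-accumulates of one 'same'-padded convolution over an output of shape `spatial`."""
    return kernel ** dims * c_in * c_out * math.prod(spatial)


def feature_map_mib(channels: int, spatial: tuple, bytes_per_value: int = 4) -> float:
    """Memory of a single float32 feature map (activations only, no gradients) in MiB."""
    return channels * math.prod(spatial) * bytes_per_value / 2 ** 20


if __name__ == "__main__":
    c = 64  # assumed channel count for one layer; illustrative only
    print(conv_params(3, 2, c, c), conv_params(3, 3, c, c))  # 36928 110656
    slice_2d, volume_3d = (128, 128), (128, 128, 128)        # assumed input sizes
    ratio = conv_macs(3, 3, c, c, volume_3d) / conv_macs(3, 2, c, c, slice_2d)
    print(ratio)                                             # 384.0 = 3x per voxel * 128 slices
    print(feature_map_mib(c, slice_2d), feature_map_mib(c, volume_3d))  # 4.0 512.0
```

Per voxel, a 3×3×3 kernel costs three times as much as a 3×3 kernel (27 versus 9 taps), and processing a whole volume at once multiplies both the work and the activation memory by the number of slices. Under these assumptions a single 64-channel activation map grows from 4 MiB to 512 MiB, before counting the other layers or the gradients stored during training, which is why 3D models are commonly trained on smaller sub-volumes.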

Furthermore, the 3D U-Net’s weighted softmax loss function is designed to work with sparse annotations: unlabeled voxels receive zero weight, so the network can be trained from only a few annotated slices per volume. In practice, however, performance can drop when those annotations are too few or not sufficiently representative of the full volume. This raises questions about the model’s robustness in scenarios where high-quality annotations are limited.
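The core idea of a loss that learns only from annotated voxels fits in a few lines of NumPy. This sketch assumes channel-first logits, integer labels, and a hypothetical `UNLABELED = -1` marker for voxels the annotator skipped; unlabeled voxels get weight zero. The optional per-class weights and the normalization by the total weight are assumptions for illustration, and the exact weighting used in the 3D U-Net paper may differ.

```python
import numpy as np

UNLABELED = -1  # hypothetical marker for voxels the annotator left unlabeled


def weighted_softmax_ce(logits: np.ndarray, labels: np.ndarray,
                        class_weights=None) -> float:
    """Weighted softmax cross-entropy that ignores unlabeled voxels.

    logits: (C, D, H, W) raw class scores; labels: (D, H, W) ints in [0, C) or UNLABELED.
    Unlabeled voxels get weight 0, so they add nothing to the loss (or its gradient).
    """
    num_classes = logits.shape[0]
    if class_weights is None:
        class_weights = np.ones(num_classes)
    # numerically stable log-softmax over the class axis
    shifted = logits - logits.max(axis=0, keepdims=True)
    log_probs = shifted - np.log(np.exp(shifted).sum(axis=0, keepdims=True))
    labeled = labels != UNLABELED
    safe_labels = np.where(labeled, labels, 0)        # placeholder index, masked out below
    picked = np.take_along_axis(log_probs, safe_labels[None], axis=0)[0]
    weights = np.where(labeled, np.asarray(class_weights, dtype=float)[safe_labels], 0.0)
    total = weights.sum()
    return float(-(weights * picked).sum() / total) if total > 0 else 0.0


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    logits = rng.standard_normal((2, 8, 16, 16))       # 2 classes, 8 slices of 16x16
    labels = np.full((8, 16, 16), UNLABELED)
    labels[3] = rng.integers(0, 2, size=(16, 16))      # only slice 3 is annotated
    print(weighted_softmax_ce(logits, labels))
```

Because unlabeled voxels contribute nothing, the loss is only as informative as the annotated slices themselves, which is why their number and representativeness matter.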

For example, in tumor segmentation, understanding the 3D structure of a tumor from volumetric MRI or CT scans is crucial. The 3D U-Net excels here, producing remarkably detailed segmentations that aid early diagnosis and treatment planning. However, although the model captures spatial relationships within medical volumes well, its utility remains largely confined to domains where annotated 3D data is abundant and the required computational resources are available. This limits its broader applicability and raises the question of whether simpler models might yield comparable results at a lower cost.

Fig 4: Volumetric segmentation using 3D U-Net.

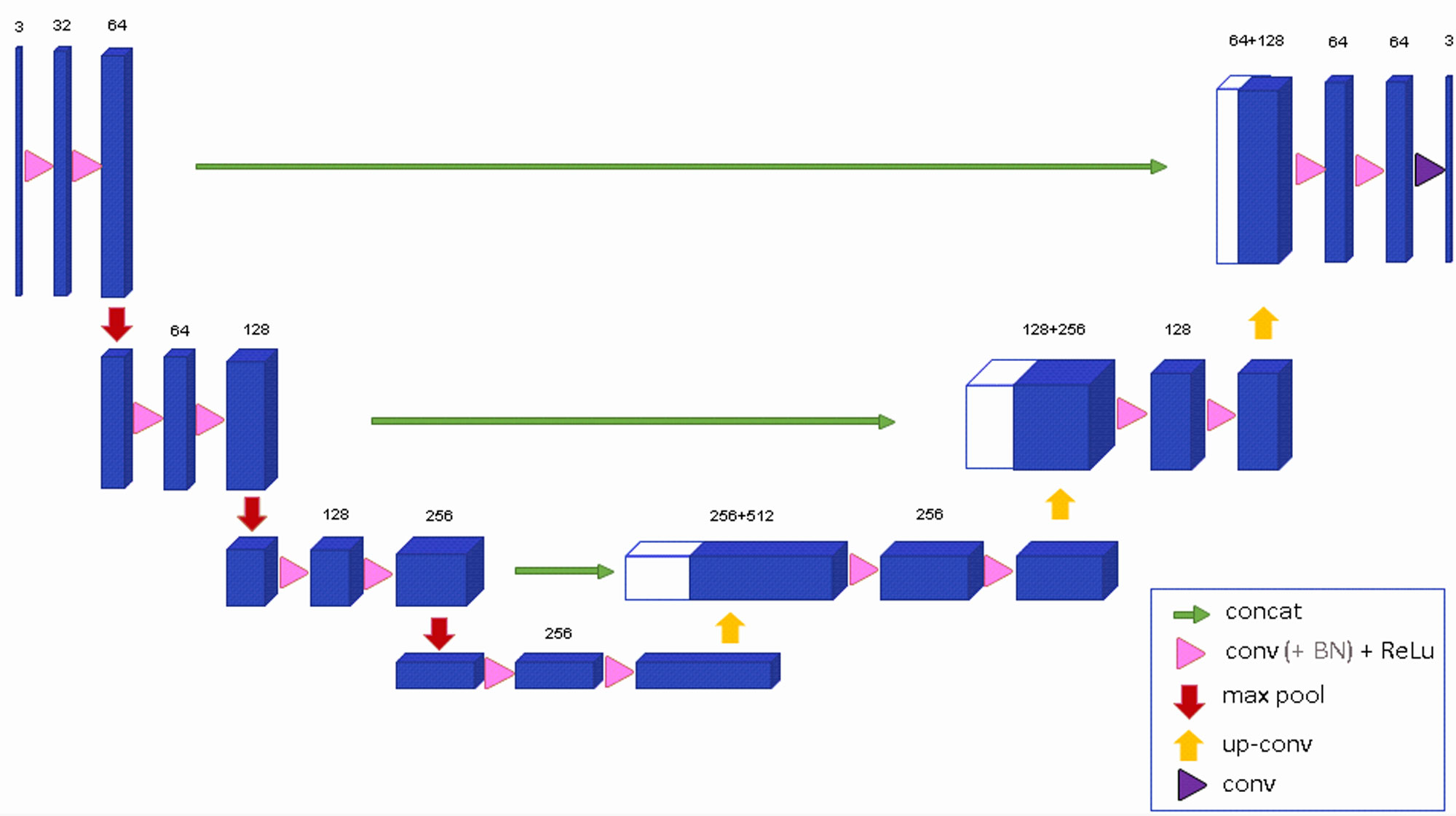

U-Net++: Enhanced Flexibility, But at What Cost?

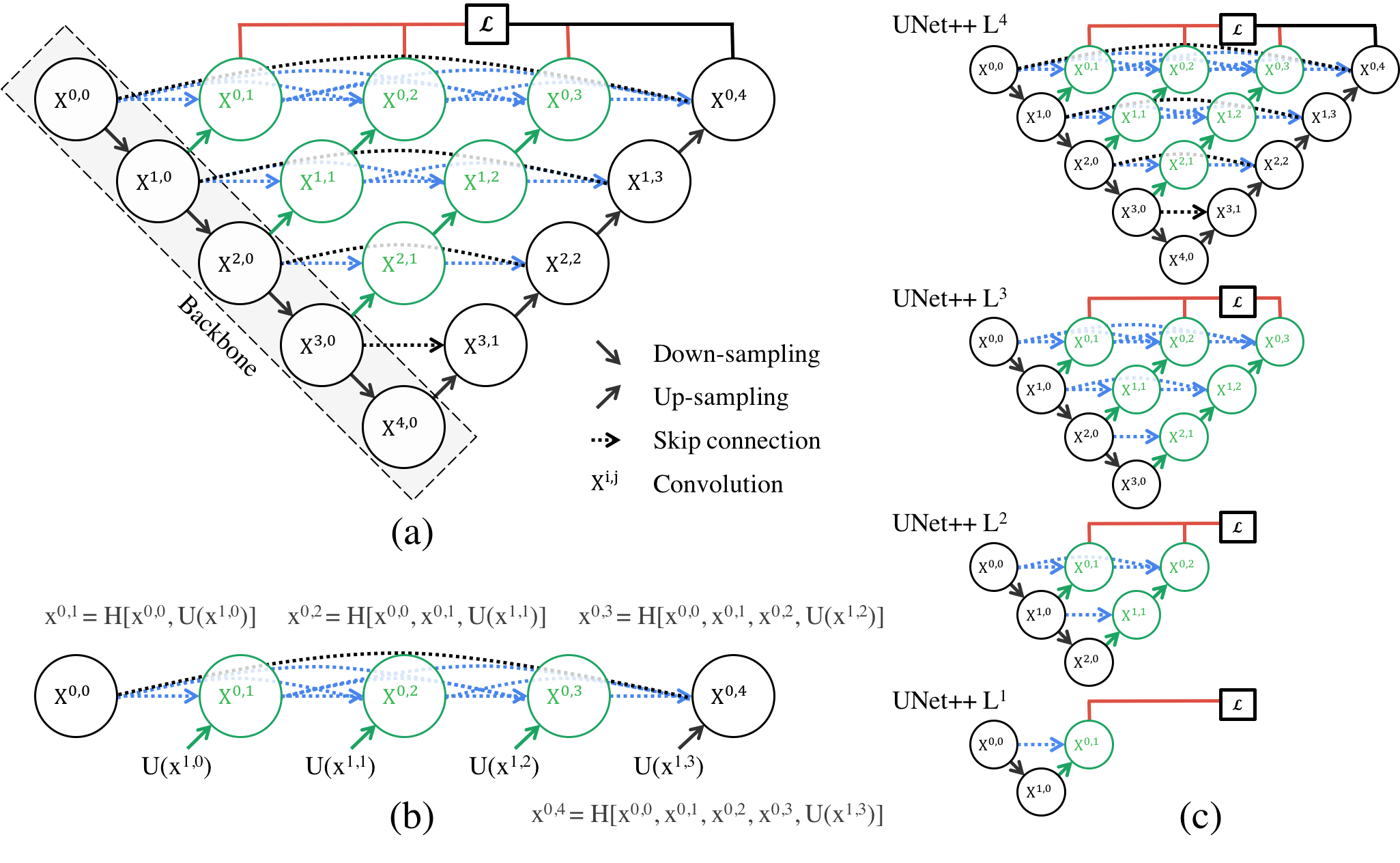

U-Net++ (Fig. 5), on the other hand, aims to address some of the limitations of the original U-Net, particularly when handling heterogeneous data in which object size and complexity vary greatly. Instead of connecting each encoder level directly to the decoder, U-Net++ routes encoder features through nested, densely connected skip pathways, narrowing the semantic gap between encoder and decoder feature maps before they are fused. This revision enhances the model’s ability to capture intricate details in segmentation tasks, especially in medical imaging. Yet the increased depth and complexity come at a cost.
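The nested skip pathways are easiest to grasp as a dependency graph. The sketch below follows the node indexing of the U-Net++ paper, where node (i, j) sits at down-sampling level i and position j along the skip pathway, and assumes an encoder depth of 4. It only enumerates which feature maps each node concatenates and performs no convolutions; the function name is illustrative.

```python
def unetpp_inputs(depth: int = 4) -> dict:
    """Map each nested node (i, j) of U-Net++ to the nodes it concatenates.

    i is the resolution level (0 = full resolution) and j the position along the skip
    pathway. Nodes (i, 0) form the encoder. A node (i, j) with j >= 1 receives every
    earlier node at its own level, (i, 0) ... (i, j-1), plus node (i+1, j-1) from one
    level below, which would be up-sampled before concatenation.
    """
    graph = {}
    for j in range(1, depth + 1):          # column by column, so inputs always exist
        for i in range(depth - j + 1):     # only nodes with i + j <= depth exist
            same_level = [(i, k) for k in range(j)]
            graph[(i, j)] = (same_level, (i + 1, j - 1))
    return graph


if __name__ == "__main__":
    g = unetpp_inputs(4)
    print(len(g))        # 10 decoder nodes, versus 4 decoder blocks in a U-Net of the same depth
    print(g[(0, 4)])     # ([(0, 0), (0, 1), (0, 2), (0, 3)], (1, 3))
    print(g[(3, 1)])     # ([(3, 0)], (4, 0))
```

The counts make the extra cost concrete: at the same depth, the network computes 10 decoder nodes instead of 4, and the top node concatenates five inputs rather than the two used in the original U-Net.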

The feature-rich pathways of U-Net++ allow it to tackle more challenging segmentation tasks effectively. However, the nested connections and dense convolutions make the model harder to optimize. Deep supervision, which attaches segmentation outputs to the full-resolution nodes of the nested decoder, can improve performance by leveraging multiple semantic levels, but it also adds complexity, particularly during training. The choice between “Accurate Mode” and “Fast Mode” reflects this trade-off between performance and speed. Accurate Mode averages the outputs of all branches, which improves robustness but is computationally expensive; Fast Mode uses a single branch, so the rest of the network can be pruned, sacrificing some accuracy for efficiency. Practitioners must therefore choose between speed and precision depending on their task.

Fig. 5: Architecture of U-Net++ [3].
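The two inference modes can be summarized in a short sketch. It assumes that the four deep-supervision heads on the full-resolution nodes (0, 1) through (0, 4) have already produced per-pixel foreground probabilities; the function names and the toy probability maps are hypothetical. `nodes_needed` counts how many nodes must be evaluated to reach a given head, which is what Fast Mode saves when the deeper part of the network is pruned.

```python
import numpy as np


def combine_heads(head_probs: list, mode: str = "accurate", branch: int = 4) -> np.ndarray:
    """Turn the deep-supervision outputs of U-Net++ into one segmentation map.

    head_probs[j - 1] holds the per-pixel foreground probabilities from the head on node (0, j).
    "accurate": average all heads.  "fast": keep only the head on node (0, branch).
    """
    if mode == "accurate":
        return np.mean(np.stack(head_probs), axis=0)
    if mode == "fast":
        return head_probs[branch - 1]
    raise ValueError(f"unknown mode: {mode!r}")


def nodes_needed(branch: int) -> int:
    """Nodes that must be computed to reach head (0, branch): all (i, j) with i + j <= branch."""
    return (branch + 1) * (branch + 2) // 2


if __name__ == "__main__":
    heads = [np.full((2, 2), p) for p in (0.125, 0.375, 0.625, 0.875)]  # toy outputs of 4 heads
    print(combine_heads(heads, "accurate")[0, 0])               # 0.5
    print(combine_heads(heads, "fast", branch=2)[0, 0])         # 0.375
    print([nodes_needed(b) for b in range(1, 5)])               # [3, 6, 10, 15]
```

Reaching the shallowest head requires only 3 of the 15 nodes in a depth-4 network, whereas Accurate Mode must evaluate all of them.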

U-Net++ shines in medical applications, and one particularly noteworthy domain is lesion detection in dermatology. Detecting and segmenting lesions, such as those associated with skin cancer, is challenging because lesions vary widely in shape, size, and texture. U-Net++ is well suited to this task: its ability to capture fine-grained details and handle objects of varying sizes makes it a powerful tool in this field. Its deep supervision helps ensure that smaller lesions are not overlooked, while its nested skip connections add robustness where lesion boundaries are unclear. By refining segmentation in this context, U-Net++ has the potential to substantially improve diagnostic outcomes, particularly in the early-stage detection of diseases such as melanoma.

Conclusion

While both 3D U-Net and U-Net++ offer clear advancements over the original U-Net, their effectiveness depends largely on the specific task at hand. The 3D U-Net’s strong performance in volumetric segmentation makes it invaluable in medical imaging, particularly for tasks like brain tumor segmentation. Yet, its niche design and high computational costs may limit its broader applicability. Similarly, U-Net++, with its ability to capture more nuanced semantic information through dense skip connections, excels in tasks like lesion detection but introduces significant overhead in terms of complexity and training time.

As these models become more complex, the trade-offs between accuracy, speed, and resource demands must be carefully considered. While they represent exciting advancements in segmentation architectures, their practical utility may still depend heavily on the constraints of the specific use case, from computational resources to the availability of annotated data.

References

- Stahl PL, Salmen F, Vickovic S, et al. Visualization and analysis of gene expression in tissue sections by spatial transcriptomics. Science 353(6294):78–82 (2016).

- Rao A, Barkley D, Franca GS, Yanai I. Exploring tissue architecture using spatial transcriptomics. Nature 596:211–220 (2021).

- Garcia-Alonso L, Lorenzi V, Mazzeo CI, Alves-Lopes JP, Roberts K, Sancho-Serra C, Engelbert J, Mareckova M, Gruhn WH, Botting RA, Li T, Crespo B, van Dongen S, Kiselev VY, Prigmore E, Herbert M, Moffett A, Chedotal A, Bayraktar OA, Surani A, Haniffa M, Vento-Tormo R. Single-cell roadmap of human gonadal development. Nature 607:540–547 (2022).

- Liu C, Li R, Li Y, Lin X, Zhao K, Liu Q, Wang S, Yang X, Shi X, Ma Y, Pei C, Wang H, Bao W, Hui J, Yang T, Xu Z, Lai T, Berberoglu MA, Sahu SK, Esteban MA, Ma K, Fan G, Li Y, Liu S, Chen A, Xu X, Dong Z, Liu L. Spatiotemporal mapping of gene expression landscapes and developmental trajectories during zebrafish embryogenesis. Cell 57:1284–1298.e1285 (2022).

- Maynard KR, Collado-Torres L, Weber LM, et al. Transcriptome-scale spatial gene expression in the human dorsolateral prefrontal cortex. Nat Neurosci 24:425–436 (2021).

- Satilmis B, Sahin TT, Cicek E, Akbulut S, Yilmaz S. Hepatocellular carcinoma tumor microenvironment and its implications in terms of anti-tumor immunity: future perspectives for new therapeutics. J Gastrointest Cancer 52(4):1198–1205 (2021).

- Wu Y, Yang S, Ma J, Chen Z, Song G, Rao D, Cheng Y, Huang S, Liu Y, Jiang S, Liu J, Huang X, Wang X, Qiu S, Xu J, Xi R, Bai F, Zhou J, Fan J, Zhang X, Gao Q. Spatiotemporal immune landscape of colorectal cancer liver metastasis at single-cell level. Cancer Discov 12:134–153 (2022).

- Zhao N, Zhang Y, Cheng R, Zhang D, Li F, Guo Y, Qiu Z, Dong X, Ban X, Sun B, Zhao X. Spatial maps of hepatocellular carcinoma transcriptomes highlight an unexplored landscape of heterogeneity and a novel gene signature for survival. Cancer Cell Int 22:57 (2022).

- Zugazagoitia J, Gupta S, Liu Y, Fuhrman K, Gettinger S, Herbst RS, Schalper KA, Rimm DL. Biomarkers associated with beneficial PD-1 checkpoint blockade in non-small cell lung cancer (NSCLC) identified using high-plex digital spatial profiling. Cancer Res 26:4360–4368 (2020).

- Gouin KH, Ing N, Plummer JT, Rosser CJ, Ben Cheikh B, Oh C, Chen SS, Chan KS, Furuya H, Tourtellotte WG, Knott SRV, Theodorescu D. An N-cadherin 2 expressing epithelial cell subpopulation predicts response to surgery, chemotherapy and immunotherapy in bladder cancer. Commun 12:4906 (2021).

Discover how U-Net is transforming biomedical image segmentation and revolutionizing medical imaging. Want to explore how AI-driven insights can enhance your research or clinical workflows? Reach out to us to learn more!